Ph.D. Candidate at Fudan University

About Me

I am a Ph.D. candidate in the School of Computer Science at Fudan University, advised by Prof. Li Shang.

My research interests include representation learning, dynamic neural networks, and vision-language models. I am also interested in applying these techniques to self-supervised learning and continual learning.

News

- 📌 [2/2023] One paper titled "Over-parameterized Model Optimization with Polyak-Łojasiewicz Condition" was accepted at the International Conference on Learning Representations (ICLR23).

- 📌 [2/2022] One paper titled "Recursive Disentanglement Network" was accepted at the International Conference on Learning Representations (ICLR22).

Education

- Ph.D. Candidate, School of Computer Science, Fudan University. 2020.9 - Present

Advisor: Prof. Li Shang

- M.Sc., School of Engineering Science, University of Chinese Academy of Sciences. 2017.9 - 2020.6

Advisor: Prof. Jie Sui

Research Interests

- representation learning (un- or semi-supervised)

- dynamic neural networks

- vision-language models

Publications

-

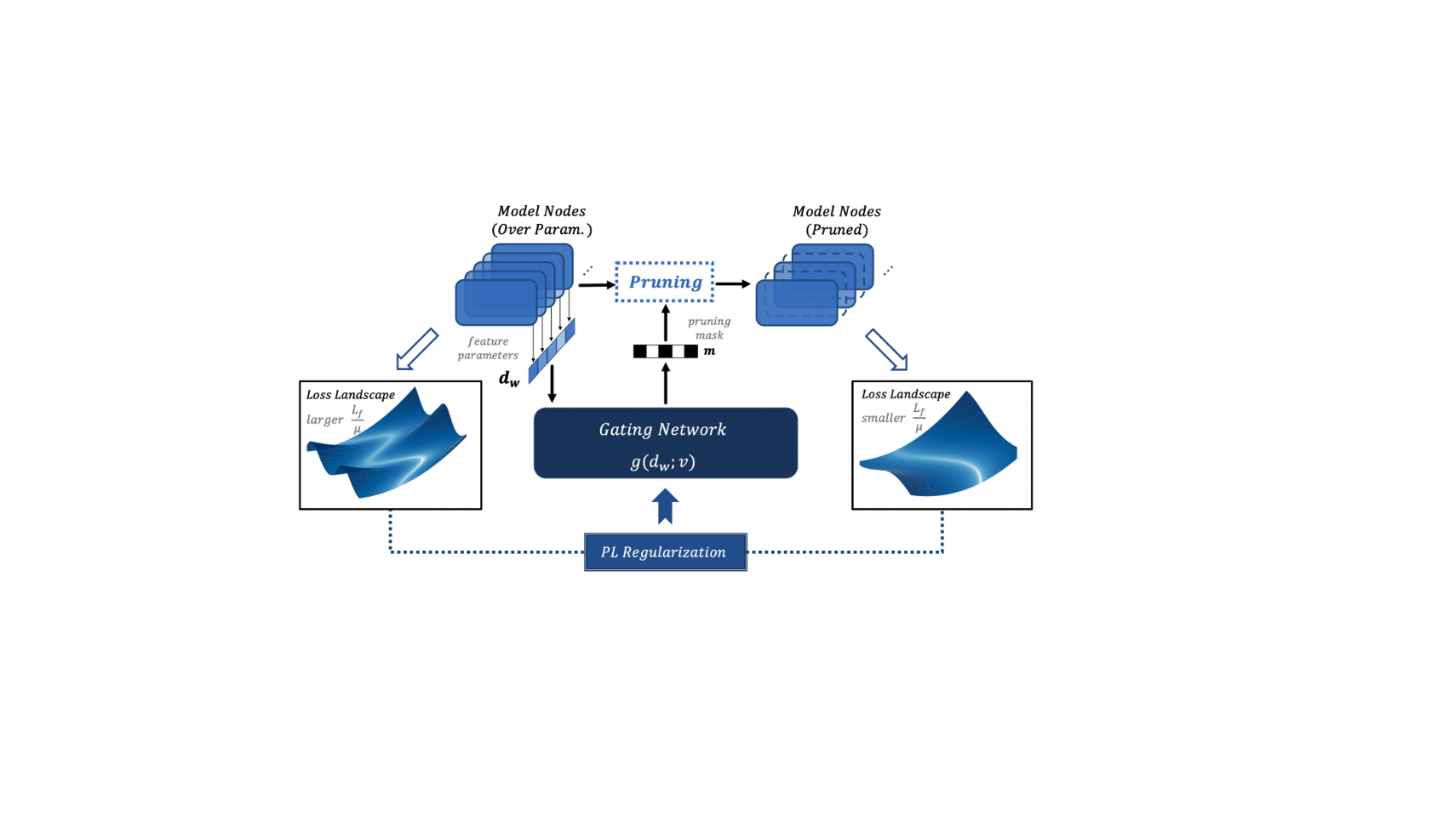

[ICLR23] Over-parameterized Model Optimization with Polyak-Łojasiewicz Condition

Yixuan Chen, Yubin Shi, Mingzhi Dong, Xiaochen Yang, Dongsheng Li, Yujiang Wang, Robert Dick, Qin Lv, Yingying Zhao, Fan Yang, Ning Gu, and Li Shang.

We first show theoretically that the convergence and generalization abilities of over-parameterized models can be upper-bounded by the ratio of the Lipschitz constant to the Polyak-Łojasiewicz (PL) constant, referred to as the condition number. Based on this finding, we propose a structured pruning method with a novel pruning criterion: a gating network that dynamically detects and masks out poorly behaved nodes of a deep model during training. The gating network is learned by minimizing the condition number of the target model, which can be implemented as an extra regularization loss term.

[PDF]

-

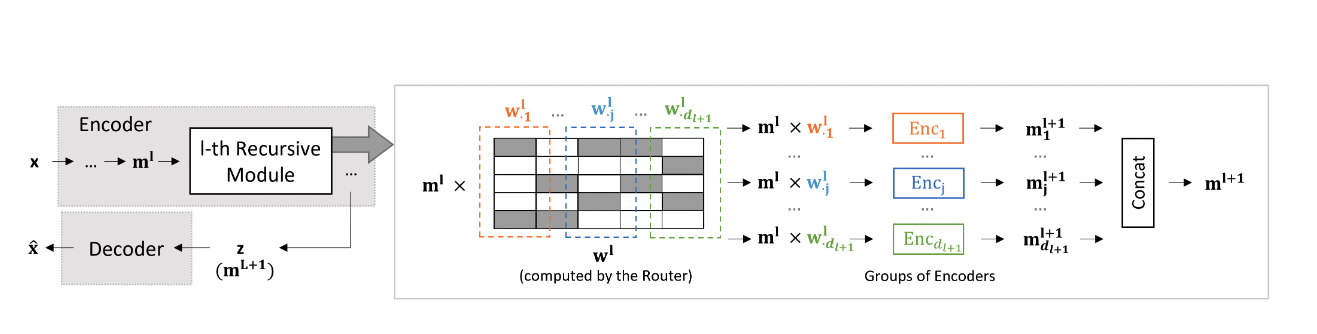

[ICLR22] Recursive Disentanglement Network

Yixuan Chen, Yubin Shi, Dongsheng Li, Yujiang Wang, Mingzhi Dong, Yingying Zhao, Robert Dick, Qin Lv, Fan Yang, and Li Shang.

Disentangled feature representations are essential for data-efficient learning. The feature space of deep models is inherently compositional, yet existing VAE-based methods apply disentanglement regularization only to the final embedding space of deep models. They therefore cannot effectively regularize the compositional feature space, which leads to unsatisfactory disentanglement. In this paper, we formulate the compositional disentanglement learning problem from an information-theoretic perspective and propose a recursive disentanglement network (RecurD) that propagates regulatory inductive bias recursively across the compositional feature space during disentangled representation learning.

[PDF]

-

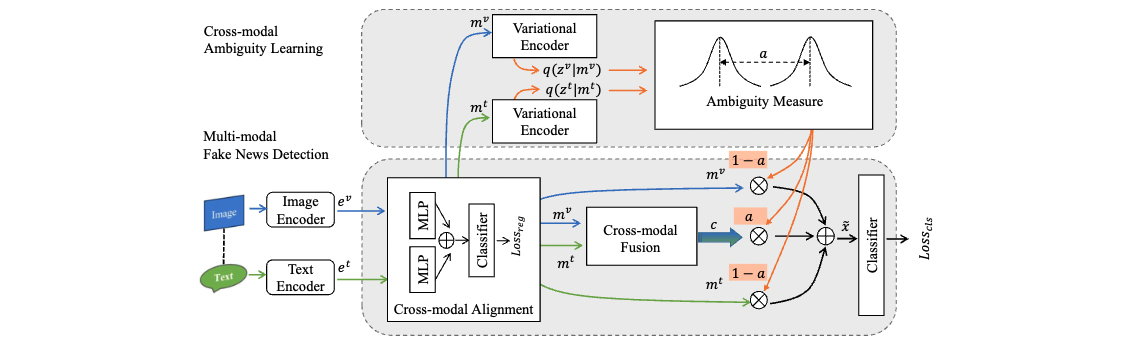

[WWW22] Cross-Modal Ambiguity Learning for Multimodal Fake News Detection

Yixuan Chen, Dongsheng Li, Peng Zhang, Jie Sui, Qin Lv, Tun Lu, and Li Shang.

Cross-modal learning is essential for accurate fake news detection given the fast-growing volume of multimodal content in online social communities. A fundamental challenge of multimodal fake news detection is the inherent ambiguity across content modalities: decisions made from individual modalities may disagree with each other, which can degrade multimodal detection. To address this issue, we formulate the cross-modal ambiguity learning problem from an information-theoretic perspective and propose CAFE, an ambiguity-aware multimodal fake news detection method. CAFE improves detection accuracy by adaptively aggregating unimodal features and cross-modal correlations, relying on unimodal features when cross-modal ambiguity is weak and on cross-modal correlations when it is strong.

[PDF]
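The condition number from the ICLR23 entry (Lipschitz constant divided by PL constant) can be made concrete on a problem where both constants have closed forms. The sketch below is not the paper's gating-network pruning method. It assumes the "Lipschitz constant" is the gradient-Lipschitz (smoothness) constant L and uses least squares with a two-column design matrix A, where L and the PL constant μ are the largest and smallest eigenvalues of AᵀA. All function names (`gram`, `smoothness_and_pl`, `gd_gaps`, and so on) are hypothetical. It also exercises the standard linear rate for gradient descent with step size 1/L under the PL condition: f(w_k) − f* ≤ (1 − μ/L)^k (f(w_0) − f*).

```python
import math

# Toy objective (an assumption for illustration): least squares
# f(w) = 0.5 * ||A w - b||^2 with a two-column A stored as rows (a1, a2).
# L = lambda_max(A^T A) is the gradient-Lipschitz (smoothness) constant and,
# when A has full column rank, mu = lambda_min(A^T A) is a PL constant:
# 0.5 * ||grad f(w)||^2 >= mu * (f(w) - f*).


def gram(rows):
    """Entries (s11, s12, s22) of the symmetric 2x2 matrix A^T A."""
    s11 = sum(a1 * a1 for a1, _ in rows)
    s12 = sum(a1 * a2 for a1, a2 in rows)
    s22 = sum(a2 * a2 for _, a2 in rows)
    return s11, s12, s22


def smoothness_and_pl(rows):
    """Return (L, mu) from the closed-form eigenvalues of the 2x2 Gram matrix."""
    s11, s12, s22 = gram(rows)
    mean = (s11 + s22) / 2.0
    radius = math.hypot((s11 - s22) / 2.0, s12)
    lam_max, lam_min = mean + radius, mean - radius
    if lam_min <= 1e-12 * lam_max:
        raise ValueError("this sketch assumes A has full column rank")
    return lam_max, lam_min


def loss(rows, b, w):
    """f(w) = 0.5 * sum of squared residuals."""
    return 0.5 * sum((a1 * w[0] + a2 * w[1] - bi) ** 2 for (a1, a2), bi in zip(rows, b))


def grad(rows, b, w):
    """Gradient A^T (A w - b), accumulated row by row."""
    g1 = g2 = 0.0
    for (a1, a2), bi in zip(rows, b):
        r = a1 * w[0] + a2 * w[1] - bi  # residual of this row
        g1 += r * a1
        g2 += r * a2
    return g1, g2


def minimizer(rows, b):
    """Solve the normal equations A^T A w = A^T b with the 2x2 inverse formula."""
    s11, s12, s22 = gram(rows)
    t1 = sum(a1 * bi for (a1, _), bi in zip(rows, b))
    t2 = sum(a2 * bi for (_, a2), bi in zip(rows, b))
    det = s11 * s22 - s12 * s12
    return ((s22 * t1 - s12 * t2) / det, (s11 * t2 - s12 * t1) / det)


def gd_gaps(rows, b, steps):
    """Gradient descent from w = 0 with step 1/L; returns f(w_k) - f* for k = 0..steps."""
    L, _ = smoothness_and_pl(rows)
    f_star = loss(rows, b, minimizer(rows, b))
    w = (0.0, 0.0)
    gaps = [loss(rows, b, w) - f_star]
    for _ in range(steps):
        g = grad(rows, b, w)
        w = (w[0] - g[0] / L, w[1] - g[1] / L)
        gaps.append(loss(rows, b, w) - f_star)
    return gaps


well = [(1.0, 0.0), (0.0, 1.0), (1.0, 1.0)]   # A^T A = [[2, 1], [1, 2]]
ill = [(1.0, 1.0), (1.0, 1.01), (1.0, 0.99)]  # nearly collinear columns
L, mu = smoothness_and_pl(well)
print(L, mu, L / mu)  # 3.0 1.0 3.0
L_ill, mu_ill = smoothness_and_pl(ill)
print(L_ill / mu_ill)  # large, because the two columns are almost parallel
```

With the well-conditioned design, the optimality gap shrinks by at least a factor of 1 − μ/L = 2/3 per step; with nearly collinear columns the ratio is in the tens of thousands, so the same bound guarantees almost no progress per step. According to the abstract, reducing this ratio is what the gating network's regularization term targets.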
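The adaptive aggregation rule described in the WWW22 entry (lean on unimodal features when cross-modal ambiguity is weak, and on cross-modal correlations when it is strong) can be pictured as a soft weighting. This is an illustrative sketch under stated assumptions, not CAFE's implementation. The ambiguity score used here (a symmetric KL divergence between the text and image prediction distributions, mapped into [0, 1) by 1 − exp(−d)) and the helper names `kl`, `ambiguity`, and `fuse` are hypothetical. In a real model, the features and distributions would come from trained encoders.

```python
import math


def kl(p, q, eps=1e-12):
    """KL divergence KL(p || q) between two discrete distributions."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)


def ambiguity(p_text, p_image):
    """Hypothetical ambiguity score in [0, 1): symmetric KL between the two
    unimodal predictions, squashed with 1 - exp(-d).
    Agreeing modalities give ~0; strongly disagreeing ones approach 1."""
    d = 0.5 * (kl(p_text, p_image) + kl(p_image, p_text))
    return 1.0 - math.exp(-d)


def fuse(text_feat, image_feat, cross_feat, a):
    """Scale unimodal features by (1 - a) and cross-modal features by a, then concatenate."""
    return ([(1 - a) * x for x in text_feat]
            + [(1 - a) * x for x in image_feat]
            + [a * x for x in cross_feat])


# Both modalities agree (e.g. [p_real, p_fake] = [0.9, 0.1]): ambiguity is 0,
# so the fused vector keeps the unimodal features and zeroes the cross-modal part.
a_low = ambiguity([0.9, 0.1], [0.9, 0.1])
print(a_low, fuse([1.0, 2.0], [3.0], [4.0], a_low))

# The modalities disagree: ambiguity is high, so cross-modal features dominate.
a_high = ambiguity([0.9, 0.1], [0.1, 0.9])
print(a_high, fuse([1.0, 2.0], [3.0], [4.0], a_high))
```

A soft weight keeps the rule differentiable, so in a trained model the score could be learned jointly with the classifier rather than thresholded by hand.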

Awards & Honors

- 2020 Outstanding Graduate of Beijing

- 2020 Outstanding Graduate of University of Chinese Academy of Sciences

- 2019 National Scholarship